Are traditional ‘best practices’ limiting the business potential for clients?

It’s an important question, but one that isn’t often asked. Having a set of best practices gives us confidence in how we execute research, with the promise that a strong research plan will deliver high-quality data. But what if your best practices require you to exclude a key group of research participants by removing mobile audiences? How does that impact the data you’re collecting, and do the results adequately reflect the marketplace opportunity?

A team of researchers, in partnership with the GRBN (Global Research Business Network), is conducting research to assess the impact of excluding mobile audiences from pricing research.

Three considerations driving best practice decisions for online research

- Mobile survey participation is increasing but consistent representation is lagging

- Survey platforms are improving but multiplatform designs remain too few

- Sample representation and sourcing needs greater transparency

With the prevalence of mobile response and a renewed focus on the respondent experience, there’s an opportunity to revisit our assumptions for what research may be appropriate for certain devices.

Increase in Mobile Participation

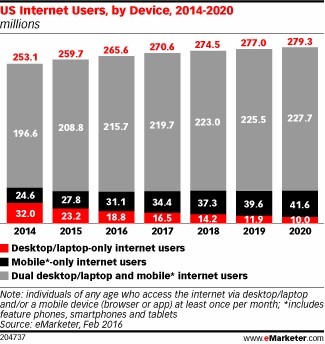

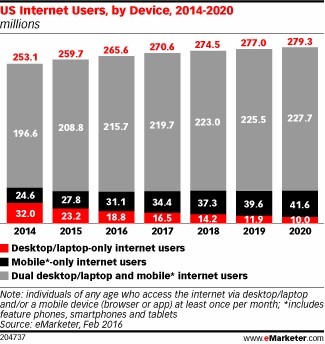

No surprise here. We’re tied to our phones and there are inherent opportunities associated with that level of access. The percentage of participants responding to a survey request on a mobile device continues to climb, and the increase is even greater among younger audiences.

While mobile access is on the rise, the focus should be on participant inclusion regardless of device.

Survey Platforms

The industry is responding to the challenge of improving the respondent experience, but there’s more work to do to make surveys present consistently across devices. Many platforms continue to render surveys differently for mobile participants, or fail to render them well enough to be accessible to participants.

Researchers are doing a tremendous disservice to clients if they don’t optimize the respondent experience and aren’t focused enough on inclusion of sample through platform design.

Sample Representation

How is your data different because mobile audiences weren’t included in the sample?

Sample companies have a responsibility to consult with clients about the potential inclusion or exclusion of groups due to poorly designed surveys. The exclusion of a portion of sample should never come as a surprise. Clients should understand the sample plan prior to research and be given time to make appropriate adjustments to maximize participation.

The opportunity is to improve the respondent experience through better platform and survey design and focus on widening the net of available participants in research. Higher quality data is the outcome and participant inclusion is imperative.

Revisiting Discrete Choice and Mobile Participation

Assumptions have been made over the past several years that certain types of surveys aren’t a good fit for a mobile audience given screen size and platform limitations. The limitations are still there with certain types of research, but as the levers have changed we have an opportunity to revisit what is deemed ‘best practice’ for specific types of research.

Discrete choice research presents some challenges and for many the default course of action has been to exclude mobile participants. Depending on the design, there can be a lot of information presented and too many options to display on a small screen. The presentation of questions can be inconsistent and could lead to different results by subsets of your sample.

As we consider the potential for mobile audiences and discrete choice, two questions come to mind.

- How does the discrete choice exercise render on a smaller screen?

- What is the impact on data if we exclude mobile participants from sample?

The focus to date has been on the first question: the rendering of the survey exercise on the screen. If the exercise is different by device, we exclude the smaller screens and control the presentation of the exercises by limiting participation to laptop and desktop respondents. It then becomes ‘best practice’ to default to exclusion of mobile audiences for discrete choice studies. But we haven’t paid sufficient attention to the second question: how the exclusion of that sample impacts the findings from research. How are insights limited as a result?

Thankfully platforms are improving to better support multiplatform surveys with a consistent display across devices. This means the presentation of the questions is the same on a phone as it is on a laptop or desktop, without the need to scroll or pinch to navigate the survey. We know this consistency is crucial to delivering data comparability.

The improvement of online survey platforms is no small achievement. It makes it possible to offer research to participants previously deemed a ‘bad fit’ for the design. What was once not a ‘best practice’ might now warrant consideration.

Pricing Research and Unintended Consequences

What if the ‘best practice’ of excluding mobile participation in a discrete choice pricing study results in more conservative pricing recommendations?

Much the way we ask participants in discrete choice surveys to make ‘trade-off’ decisions over product features, researchers go through a similar series of ‘trade-off’ decisions to determine their optimal approach for executing research. Decisions regarding sample, programming, question types and exercises to include, incentives to offer, and other areas have a major impact on the potential for insights.

In the case of pricing research, these decisions on execution, specifically on the inclusion or exclusion of mobile audiences, may fundamentally change the recommendations from research.

Could pricing research without mobile audiences lead to more conservative or aggressive pricing decisions?

Research Plan and NEXT Presentation

Through our partnership with Mindbody, a B2B software provider serving the wellness and fitness community, we intend to better understand how pricing decisions may be affected by the inclusion of mobile audiences.

Our team of researchers will share findings on this topic with the industry at the Insights Association NEXT Conference on May 1st, so we hope to see you there.

Research Team:

Andrew Cannon, GRBN

Dyna Boen, UBMobile

Lisa Wilding-Brown, Innovate MR

Bob Graff, MarketVision Research

David Lau, Mindbody

Bob Graff

MarketVision Research
